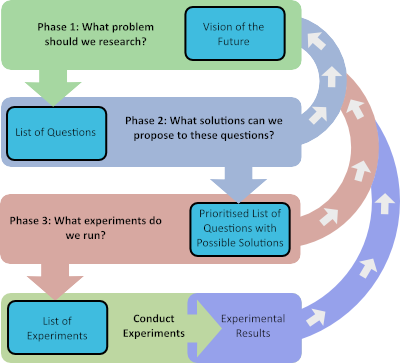

Placing Empirical Research into the Roadmap

It is so easy to get carried away with a new technology. It can be so much fun playing with it, learning about how it works and just trying it out that we can often forget why we are investigating it in the first place. That is why it is important to have a plan, a key set of criteria with which to assess the technology you are playing with. This is the 3rd phase of the research roadmap process.

So far, we’ve covered a lot of ground. We’ve introduced the research roadmap process and described the first phase in detail, working out how to create a vision of the future and how global trends can affect and help predict that. In the second phase we showed how these visions of the future can be translated into open questions. We even saw how these open questions resolve themselves into three basic types of problems:

- Problems that are already solved

- Where there are one or more solutions possible

- Where there is no solution at all

Obviously, if there is already a well-known solution to the problem we can simply move on and study the remaining questions. If you recall, both Edison and Swan faced the problem of creating a vacuum for their light bulbs; both used the Sprengel pump, a solution that had already been produced by a German inventor. In a similar way, if there is a workable solution then move on. The value is in addressing the more challenging questions.

Address the Challenging Questions

In this blog post we are going to cover how we structure our research to address the latter two bullet points: those cases where there are multiple solutions, and the rare instances when there are no solutions at all.

General Approach to Not Getting Distracted

Keeping focused on why we are doing the research is essential. This is a problem for a lot of researchers, so much so that Dr Peyton-Jones, who is a Microsoft Researcher based in Cambridge, has shared a slide deck he’s prepared for fellow researchers to help address this issue (which I’ll talk more about further down).

Within the academic research world, the major output of any research will be an academic paper. Academic papers are often used as a measure of success. Researchers and their institutions are often evaluated on the number of papers they have published, and the relevance of their research is assessed by the number of citations their papers receive from other research papers. These same metrics are also used by government agencies; for instance SFI (Science Foundation Ireland) uses these metrics to measure the success of the academic institutions within the country.

Most researchers approach research in the following way:

- Define research direction / idea / questions to investigate

- Do investigation

- Write up results in a paper

Dr Peyton-Jones suggests we flip the last two steps and focus on writing the paper first, then conducting the research. Why? Because when we are writing the paper, we are forced to keep ourselves focused. Academic papers usually follow a standard structure: context and background research, problem presentation, experiment definition (or solution), results and conclusion. By working with this structure in mind we are forced to answer these questions and keep ourselves focused.

However, during the investigation, it is so easy to discover something new along the way and find your time and research spinning off in a direction you hadn’t originally intended.

Evaluating Multiple Solutions

As I mentioned in the last post, when there are multiple solutions available the empirical research becomes focused on technology selection. It is often the case that technology originally built for one purpose can be used for a different purpose. Naturally this can produce a situation where the technology is tailored for an original set of criteria and doesn’t exactly match the criteria for the problem we are currently addressing. Typically, when we see that there are multiple possible solutions to one of the problems we’ve encountered, it is because the candidate solutions have come from adjacent industries or problems.

Technical Evaluation

As always, it is important to have defined the criteria for the experiment. To do this, it is necessary to identify which aspects of the technologies you are evaluating are important to the problem being addressed. If you recall, in the previous post I talked about defining the questions. Here we need to refer back to those questions and refine them to produce a qualifying metric. Often this requires going back to the scenarios and looking for additional detail.

Let’s take an Internet of Things example. Imagine selling a smart temperature sensor to a customer. They place this sensor in their home and connect it to their home Wi-Fi. Many of us have done this with Google Home, Amazon Alexa or even Smart TVs. Typically, these devices reach out to a server in the cloud when we interact with them. But we need our smart sensor to work the other way: we reach out to it from the cloud. Typically, this type of communication is blocked, so we need some way around this. There is a whole range of solutions, each with its own “pros” and “cons”, and in order to evaluate them we need to focus on the set of “pros” and “cons” we care about the most.

In the example above this could include factors like:

- The load on the cloud server

- The amount of additional (non-application) data that is transferred

- The latency (time from cloud requesting information from the sensor and the sensor replying)

- The goodput (the throughput of application-specific data)
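As a rough illustration, three of the factors above can be computed from raw measurements. This is only a sketch: the `Trial` record, its field names and the `summarise` helper are hypothetical, and load on the cloud server is left out because it would need server-side instrumentation.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Trial:
    """One cloud-to-sensor request/response measurement (hypothetical schema)."""
    latency_ms: float    # time from the cloud request to the sensor's reply
    payload_bytes: int   # application data carried, e.g. the temperature reading
    total_bytes: int     # everything on the wire, including protocol overhead
    duration_s: float    # wall-clock time taken by the transfer


def summarise(trials: list[Trial]) -> dict[str, float]:
    """Reduce raw trials to the metrics used to compare candidate solutions."""
    payload = sum(t.payload_bytes for t in trials)
    total = sum(t.total_bytes for t in trials)
    elapsed = sum(t.duration_s for t in trials)
    return {
        "median_latency_ms": median(t.latency_ms for t in trials),
        # Additional (non-application) data transferred
        "overhead_bytes": total - payload,
        "overhead_ratio": (total - payload) / total,
        # Goodput counts application bytes only
        "goodput_bytes_per_s": payload / elapsed,
    }
```

Running the same set of trials against each candidate gives one comparable summary per candidate.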

In this case we would need to go back to the original scenario and really understand what problem the technology in the scenario was addressing or what benefit it was providing. This will point us to the key metrics we need to monitor. A sensor deployed to monitor environmental temperature is unlikely to see sudden, dramatic shifts in the temperature it monitors. However, a temperature sensor deployed on, say, a gas furnace very much might. If the sensor is being used as part of a fire alarm, then the time between the sensor observing a spike in temperature and the alarm going off is important, so latency would be key. Only once we’ve really delved into the details can we determine the key metrics.
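One way to make "which metrics matter most" explicit is a weighted comparison, where the weights come from the scenario. The sketch below is an assumption of mine rather than a prescribed method: the candidate names, the measured values and the weights are all made up, and every metric is treated as lower-is-better.

```python
def rank(candidates: dict[str, dict[str, float]],
         weights: dict[str, float]) -> list[str]:
    """Rank candidates best-first by a weighted sum of min-max normalised metrics.

    All metrics are assumed to be lower-is-better, so the lowest score wins.
    """
    scores = {name: 0.0 for name in candidates}
    for metric, weight in weights.items():
        values = [m[metric] for m in candidates.values()]
        low = min(values)
        span = max(values) - low
        for name, m in candidates.items():
            # Scale each metric to 0..1 so different units can be combined
            normalised = (m[metric] - low) / span if span else 0.0
            scores[name] += weight * normalised
    return sorted(scores, key=scores.get)


# Illustrative numbers only
candidates = {
    "candidate_a": {"latency_ms": 40.0, "overhead_ratio": 0.6},
    "candidate_b": {"latency_ms": 200.0, "overhead_ratio": 0.1},
}
fire_alarm = {"latency_ms": 0.9, "overhead_ratio": 0.1}
ambient_monitor = {"latency_ms": 0.1, "overhead_ratio": 0.9}
```

With these numbers, the fire alarm weighting favours the low-latency candidate, while the ambient monitoring weighting favours the low-overhead one. The same measurements lead to different choices depending on the scenario.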

Filtering the Solutions

The technical merits of a solution also need to be considered together with the commercial merits of a solution. This is particularly true when Open Source software is concerned. The solutions we propose, particularly those that originate outside of our organisation, need to be commercially deployable. Imagine that your livelihood and that of your family relied on the technology you’re suggesting. If you want your organisation to invest in your proposal then the following is what is going to happen.

Commercial Requirements and Open Source Software

For any organisation, taking on a commitment to use any piece of software is a risk. Imagine starting to use an open source library only to discover that the library is no longer maintained and there is a security flaw within it. These are the types of risks that organisations need to avoid and to do this we normally look for a set of qualifying points:

- How long has the project been running?

- Which other organisations are using it?

- Has it been commercially deployed already?

- What is the frequency of updates to the project?

Obviously, some emerging projects will not meet all the criteria, in which case an organisation may take on the maintenance of a project or seek to fund someone who already is. This depends on how critical the project is to the organisation.
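The qualifying points above can be turned into a simple checklist. The following is a minimal sketch with assumptions throughout: the `Project` fields, the default thresholds, and the use of "days since last release" as a stand-in for update frequency are all illustrative choices, and each organisation would set its own.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Project:
    """Facts gathered by hand about an open source project (hypothetical schema)."""
    name: str
    first_release: date
    last_release: date
    known_adopters: int          # other organisations known to use it
    commercially_deployed: bool


def qualification_gaps(p: Project, today: date,
                       min_age_years: float = 2.0,
                       min_adopters: int = 1,
                       max_days_since_release: int = 365) -> list[str]:
    """Return the qualifying points the project fails; an empty list means it passes."""
    gaps = []
    if (today - p.first_release).days / 365.25 < min_age_years:
        gaps.append("age")
    if p.known_adopters < min_adopters:
        gaps.append("adopters")
    if not p.commercially_deployed:
        gaps.append("commercial_deployment")
    # Proxy for update frequency: how stale is the latest release?
    if (today - p.last_release).days > max_days_since_release:
        gaps.append("update_frequency")
    return gaps
```

A non-empty result is not an automatic rejection. It is the list of risks the organisation would be taking on, for example by maintaining or funding the project itself.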

Creating a Solution

As I mentioned in my prior post, there are times when no suitable solution can be found. In these cases it is necessary to examine the possibility of creating your own solution. Coming from a software development background, this used to be my default go-to response: “Yes, let’s build it!”. But the cost of software development isn’t just the time it takes to build it; it is also the lifetime cost of maintaining and supporting that software across the duration of a product’s lifecycle.

Search, Search and Search Again

For us to have arrived at the decision that there is no other viable solution available implies that we have done our homework and looked at alternative solutions. If you can’t find an alternative, ask around: ask colleagues or post on message boards. The chances are that if your organisation needs a solution, someone else may need it too. I highly recommend that you double check; even if you uncover a solution that doesn’t quite match your needs, you can learn from what others have done.

Creating a Specification

Before designing the solution, be as specific as you can. If you’ve been searching and comparing existing solutions you should have these details to hand; if not, now is the time to go and research them. What, precisely, does the solution need to do? If we think back to our temperature sensor example, what should our targeted data transfer rate be, in bytes per second? What should the goodput be, again in bytes per second? What is the acceptable latency in milliseconds?

It is important to dig out all these details before embarking on the creation of a solution.
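Writing the specification down as explicit, checkable targets makes it harder to leave a number vague. The sketch below shows one possible shape for the temperature sensor example; the `Requirement` type and every threshold in `SPEC` are placeholder values invented for illustration, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    metric: str
    limit: float
    higher_is_better: bool

    def is_met(self, measured: float) -> bool:
        """Compare a measured value against the target in the right direction."""
        if self.higher_is_better:
            return measured >= self.limit
        return measured <= self.limit


# Placeholder targets; real values come from the scenario
SPEC = [
    Requirement("data_rate_bytes_per_s", 512.0, higher_is_better=True),
    Requirement("goodput_bytes_per_s", 128.0, higher_is_better=True),
    Requirement("latency_ms", 250.0, higher_is_better=False),
]


def unmet(spec: list[Requirement], measured: dict[str, float]) -> list[str]:
    """List metrics that miss their target; unmeasured metrics count as unmet."""
    return [r.metric for r in spec
            if r.metric not in measured or not r.is_met(measured[r.metric])]
```

Treating a missing measurement as a failure is a deliberate choice here: it forces every target in the specification to be tested before the solution is declared fit.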

Looping Back into the Research Roadmap

The results of any experiment, or attempt to create a solution, provide important information which can be fed back into the research roadmap.

While we as industrial researchers are trying to find working, sellable solutions to problems, it is sometimes the case that our experiments will disprove a hypothesis, or that an attempt to create a solution is unsuccessful. That is all valuable data. Whether the experiment or solution creation effort showed a technology was a perfect fit, a close fit, or not a fit at all, we should feed that information back into our vision of the future. This helps refine and update the vision of the future and the path it will take. While the technology we study or attempt to create may not fit our needs today, other advances in the future may mean that we can revisit it at another time. But for now, we need to update the vision of the future and consider an alternative vision: a tweaked and updated one.

This is the last in the mini-series explaining the research roadmap process. I hope you’ve enjoyed it and found it interesting. If you’d like to read or hear more, or have questions, please don’t hesitate to reach out to me via Twitter @mcwoods, or LinkedIn.